I’m the weird one in the room. I used 7z for 10-15 years and have now switched to .tar.zst, after finding out that Zstandard achieves a better compression ratio than 7-Zip, even with 7-Zip in “best” mode, LZMA version 1, huge dictionary sizes and whatnot.

```shell
zstd --ultra -M99000 -22 files.tar -o files.tar.zst
```
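For anyone wanting to try the same switch, here is a minimal sketch of the whole round trip, assuming a POSIX shell with `tar` and `zstd` on the PATH. The function name `pack_and_verify` and its arguments are hypothetical. It reuses `--ultra -22` from the command above but leaves out `-M99000`: according to the zstd manual, `-M` sets a memory limit that mainly applies to decompression (and `--patch-from`), so add it back if you rely on it.

```shell
#!/bin/sh
# Minimal sketch (hypothetical helper): tar a directory, compress it with
# zstd at level 22, then verify the result before trusting it as a backup.

# pack_and_verify SRC_DIR OUT_BASENAME
#   Writes OUT_BASENAME.tar and OUT_BASENAME.tar.zst.
#   Returns non-zero if any step fails.
pack_and_verify() {
  src_dir=$1
  tar_file="$2.tar"
  zst_file="$2.tar.zst"

  # Bundle the directory into one uncompressed tar archive.
  tar -cf "$tar_file" "$src_dir" || return 1

  # Compress at level 22; levels above 19 are only accepted with --ultra.
  # -f overwrites an existing output file, -q silences progress output.
  zstd -q -f --ultra -22 "$tar_file" -o "$zst_file" || return 1

  # Check the compressed file's integrity without writing any output.
  zstd -q -t "$zst_file" || return 1

  # Decompress to stdout and compare byte-for-byte with the original tar.
  zstd -q -d -c "$zst_file" | cmp -s - "$tar_file" || return 1

  # Report both sizes in bytes.
  echo "$(wc -c < "$tar_file") -> $(wc -c < "$zst_file") bytes"
}
```

For example, `pack_and_verify files files` would produce the same `files.tar` and `files.tar.zst` as the command above and print both sizes. The decompress-and-`cmp` step costs a second pass over the data, but it confirms the archive is intact before you delete the uncompressed tar.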

Stage 2:

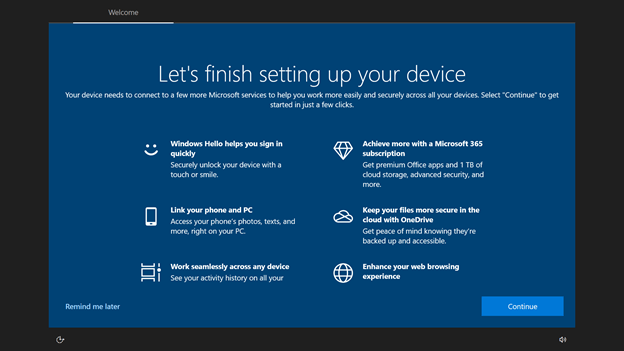

Documents folder? You want to rule my whole computer, dictate some nonsensical folder structure, and then act as if, out of the goodness of your heart, you’re letting me have this little set of folders, deep inside your weird structure, to store my stuff? And you’re even telling me how to sort it? On my own hard drive, connected to my own computer?