- 4 Posts

- 152 Comments

Oh. I guess they could take the sky from me.

2·10 days ago

There used to be very real hardware reasons that upload had much lower bandwidth. I have no idea if there still are.

72·10 days ago

Premium Lite is hilarious branding. “Oh, it’s high quality, but like, less. Quality Lite”

101·13 days ago

Yup. Communication requires effort on the part of the sender and receiver. I reject the premise that anyone needed convincing by the Dems. If simply seeing the candidates speak wasn’t enough, that portion of the electorate is not putting in the slightest effort to understand. There are many motivations for wilful ignorance. There were perhaps other explanations for Trump’s win in '16. This time it’s just that America would rather have a convicted felon as a president.

2·14 days ago

Sensible Erection (reddit precursor) had to spin off a-whole-nother site, Sensible Election, for the '04 (I think?) election and aftermath.

What got Trump elected is climate change increasing pressures in hundreds of domains, making people fearful and easy to manipulate. It’s happening the world over. Blaming whoever opposes Trump for not doing it quite right is another flavor of copium.

13·20 days ago

Just for fun, this can be accomplished with a poorly shielded speaker/audio cable next to a poorly shielded CRT/monitor cable displaying a locally run LLM. And it will make you feel like a hax0r

You muzzled Appa?

1·24 days ago

Yeah, but they encourage confining it to a virtual machine with limited access.

5·24 days ago

Logic and Path-finding?

2·26 days ago

Eight O’Clock whole beans fresh ground and percolated to perfection. chef’s kiss

389·26 days ago

Shithole country.

There’s always a lighthouse. There’s always a man. There’s always a city.

161·27 days ago


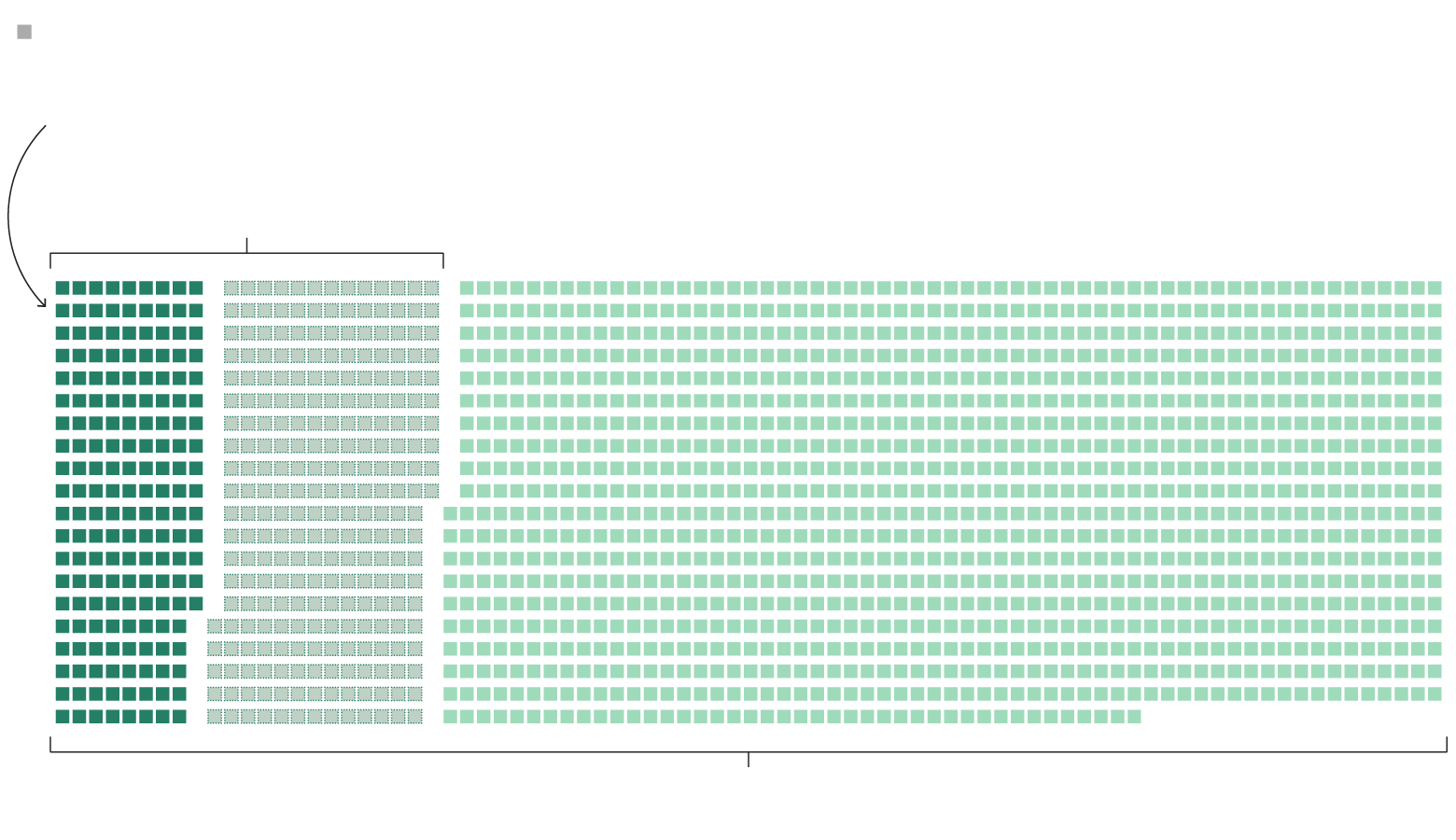

From left to right - Student loans forgiven ($175 Billion)

Student loans that would have been forgiven without R fuckery ($430 Billion)

Total federal student loan debt ($1.6 Trillion)

Yeah, using image recognition on a screenshot of the desktop and directing a mouse around the screen with coordinates is definitely an intermediate implementation. Open Interpreter, Shell-GPT, LLM-Shell, and DemandGen make a little more sense to me for anything that can currently be done from a CLI, but I’ve never actually tested ’em.

I was watching users test this out and am generally impressed. At one point, Claude tried to open Firefox, but it was not responding. So it killed the process from the console and restarted. A small thing, but not something I would have expected it to overcome this early. It’s clearly not ready for prime time (by their repeated warnings), but I’m happy to see these capabilities finally making it to a foundation model’s API. It’ll be interesting to see how much remains of GUIs (or high level programming languages for that matter) if/when AI can reliably translate common language to hardware behavior.

Can I blame Trump on 9/11 or something?

I think it’s more likely a compound sigmoid (don’t Google that). LLMs are composed of distinct technologies working together. As we’ve reached the inflection point of the scaling for one, we’ve pivoted implementations to get back on track. Notably, context windows are no longer an issue. But the most recent pivot came just this week, allowing for a huge jump in performance. There are more promising stepping stones coming into view. Is the exponential curve just a series of sigmoids stacked too close together? In any case, the article’s correct - just adding more compute to the same exact implementation hasn’t enabled scaling exponentially.
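For anyone who wants to poke at the "stacked sigmoids" idea, here's a rough toy sketch, not a model of any real LLM. Everything in it is made up for illustration: the function names, the number of waves, the spacing between them, and the assumption that each new wave tops out at double the previous one. Each wave flattens on its own, but if the next one kicks in before the last has fully plateaued, the sum keeps roughly doubling per step, right up until you run out of waves.

```python
import math


def sigmoid(x, midpoint, height, steepness=1.0):
    """Logistic curve rising from 0 to `height`, centred on `midpoint`."""
    return height / (1.0 + math.exp(-steepness * (x - midpoint)))


def stacked_sigmoids(x, n_waves=6, spacing=4.0, growth=2.0):
    """Sum of `n_waves` sigmoids.

    Wave k is centred at k * spacing and saturates at growth ** k.
    All of these parameters are illustrative assumptions.
    """
    return sum(sigmoid(x, k * spacing, growth ** k) for k in range(n_waves))


if __name__ == "__main__":
    # Print the stacked curve at each wave's midpoint.
    for x in range(0, 24, 4):
        print(x, round(stacked_sigmoids(x), 2))
```

Widen `spacing` and you get visible plateaus between the jumps; shrink it and the staircase smooths into something that looks exponential from a distance. Either way the total is capped once the last wave saturates.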